- EXTRACT LINKS FROM A WEB PAGE FREE
- EXTRACT LINKS FROM A WEB PAGE CODE
- EXTRACT LINKS FROM A WEB PAGE INSTALL
- EXTRACT LINKS FROM A WEB PAGE HOW TO

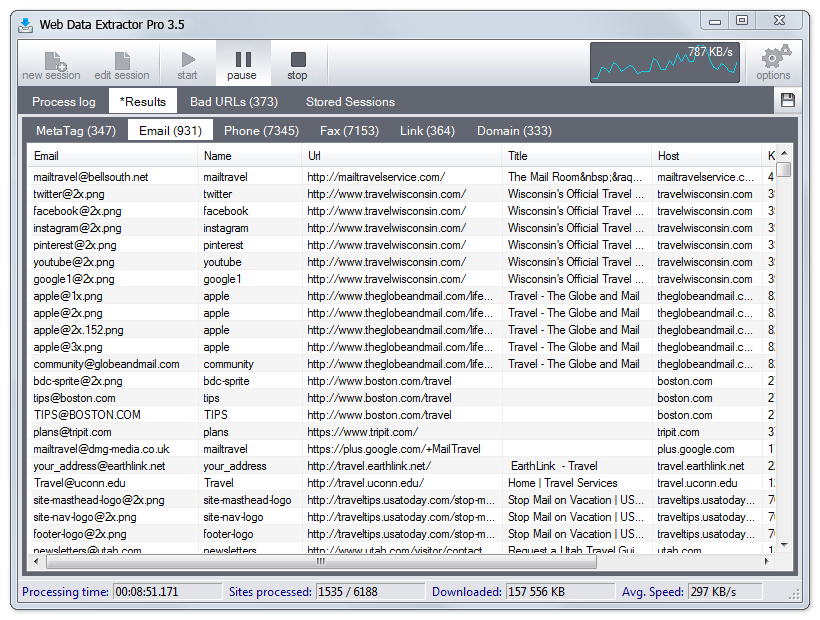

Running the tool locally: extracting links from a page can be done with a number of open-source command-line tools. Lynx, a text-based browser, is perhaps the simplest. Scrapio is a no-code web scraper that helps businesses automate their workflow and spend less time on data extraction: you can extract content from any web page, manage scraped data, and even repair data scraping on the scraped links. One of the most important tasks a link extractor is used for is counting the external and internal links on your web page.
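To make the internal/external distinction concrete, here is a minimal Python sketch. It assumes you already have the raw href values from a page, and it applies one simple rule of our own choosing: after relative links are resolved against the page URL, a link is internal when its hostname matches the page's hostname. The function name `classify_links` is hypothetical and not part of any tool mentioned here.

```python
from urllib.parse import urljoin, urlparse


def classify_links(page_url, hrefs):
    """Split raw href values into internal and external absolute URLs.

    Assumed rule (ours, not any specific tool's): a link is internal when
    its host equals the host of page_url after resolving relative links.
    """
    page_host = urlparse(page_url).netloc.lower()
    internal, external = set(), set()
    for href in hrefs:
        absolute = urljoin(page_url, href)  # "/about" -> "https://host/about"
        parts = urlparse(absolute)
        # Skip mailto:, javascript:, tel: and other non-web schemes.
        if parts.scheme not in ("http", "https"):
            continue
        if parts.netloc.lower() == page_host:
            internal.add(absolute)
        else:
            external.add(absolute)
    return internal, external
```

Calling `classify_links("https://example.com/blog/", ["/about", "contact.html", "https://example.org/"])` yields two internal links and one external link. Note that with this rule `www.example.com` and `example.com` count as different hosts; normalize hostnames first if you want them treated as one site.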

EXTRACT LINKS FROM A WEB PAGE FREE

It is a 100% free SEO tool with multiple uses in SEO work.

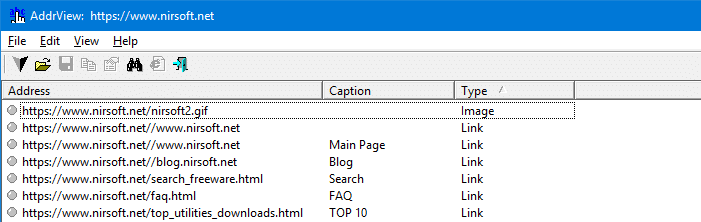

The link extractor tool scans a web page's HTML source code and extracts all the URLs it contains. Just paste or enter a valid URL in the free online link extractor tool and click the Extract button; the scanned URLs will show in the result section in just a few seconds.

Reasons for using a tool such as this are wide-ranging: from Internet research and web page development to security assessments and web page testing. Listing the links, domains, and resources that a page links to tells you a lot about the page.

The tool has been built with Lynx, a simple and well-known command-line tool. Lynx is a text-based web browser popular on Linux-based operating systems. It was first developed around 1992 and can use old-school Internet protocols, including Gopher and WAIS, along with the more commonly known HTTP, HTTPS, FTP, and NNTP. Being a text-based browser, it cannot display graphics; however, it is a handy tool for reading text-based pages. Lynx can also be used for troubleshooting and testing web pages from the command line.

API for the Extract Links Tool: another option for accessing the extract links tool is to use the API. Rather than using the above form, you can make a direct link to the following resource with the parameter ?q set to the address you wish to extract links from. The API is simple to use and aims to be a quick reference tool; like all our IP Tools, it has a limit of 100 queries per day, or you can increase the daily quota with a Membership.

If a website loads its content dynamically with AJAX, you can check the AJAX option to allow Octoparse to extract content from dynamic web pages.

What links do we extract? Our service parses the provided web page and discovers all anchor href attributes. We do not check the content of the document referenced by each link.
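The service's own parser is not shown here. As a stand-in, this sketch uses Python's standard `html.parser` module to do the same basic job: find every `<a>` tag, read its href attribute, and resolve it to an absolute URL. The names `AnchorHrefParser`, `extract_links`, and `linked_domains` are illustrative only. The sketch does not execute JavaScript, so links that AJAX adds after the page loads will not appear.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class AnchorHrefParser(HTMLParser):
    """Collect the href of every <a> tag, resolved against a base URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag and attribute names in lowercase.
        if tag != "a":
            return
        for name, value in attrs:
            # value is None for a bare attribute such as <a href>.
            if name == "href" and value:
                self.links.append(urljoin(self.base_url, value.strip()))


def extract_links(html, base_url):
    """Return all anchor links in document order (duplicates kept)."""
    parser = AnchorHrefParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def linked_domains(links):
    """Return the sorted, de-duplicated hostnames the links point to."""
    hosts = {urlparse(link).netloc for link in links}
    hosts.discard("")  # mailto:, javascript: and similar links have no host
    return sorted(hosts)
```

For a page containing `<a href="/docs">`, `extract_links(html, "https://example.com/")` returns `"https://example.com/docs"`, and `linked_domains` then lists each host the page points to once, which is a quick way to see which domains a page links to.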
Websites often apply the AJAX technique, which allows a web page to send and receive data in the background without interfering with the page display. For the extraction of hyperlinks, you just need to define the selector.

In this tutorial, the first step is to import the necessary modules, then get the page URL and send a GET request. Next, use the BeautifulSoup module to parse the HTML page:

`html_page = BeautifulSoup(response.text, "html.parser")`

Then find all the `<a>` tags and add the external URLs to a set. Finally, use a for loop over the external URLs to print the href of each link; at the end, your terminal prints the number of external links or URLs, if any are present in the web page.

About the Page Links Scraping Tool: this tool offers a fast and easy way to scrape links from a web page. Extracting URLs from a web page, or from any string, is also quite easy with Agenty's Chrome extension.
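Extracting URLs from an arbitrary string, rather than from parsed HTML, is usually done with a regular expression. This is a deliberately simple sketch of that idea and not how Agenty's extension works. The pattern is an assumption that only catches `http://` and `https://` URLs, and trimming trailing punctuation will also cut a closing parenthesis that genuinely belongs to a URL.

```python
import re

# Simplified pattern (an assumption, not a standard): "http://" or "https://"
# followed by any run of characters that are not whitespace, quotes, or < >.
URL_PATTERN = re.compile(r"""https?://[^\s<>"']+""")

# Characters that usually end the surrounding sentence rather than the URL.
TRAILING_PUNCTUATION = ".,;:!?)"


def urls_in_text(text):
    """Return http(s) URLs found in text, in first-seen order, without duplicates."""
    cleaned = (m.group(0).rstrip(TRAILING_PUNCTUATION) for m in URL_PATTERN.finditer(text))
    # dict.fromkeys drops repeats while keeping insertion order.
    return list(dict.fromkeys(cleaned))
```

For example, `urls_in_text("See https://example.com/a, or (https://example.org/b).")` returns the two URLs without the trailing comma, parenthesis, or period.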

EXTRACT LINKS FROM A WEB PAGE CODE

The code for extracting the external links or URLs from a web page with Python begins by importing the modules and then follows the steps described above.
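Here is one way the steps above can be put together with requests and BeautifulSoup. It is a sketch, not the original tutorial's exact code: the function names `external_links` and `main` are our own, "external" is assumed to mean any http(s) link whose host differs from the page's host, and relative links are resolved with `urljoin` before comparing.

```python
from urllib.parse import urljoin, urlparse

import requests
from bs4 import BeautifulSoup


def external_links(html, page_url):
    """Return the set of absolute http(s) URLs that point to another host."""
    html_page = BeautifulSoup(html, "html.parser")
    page_host = urlparse(page_url).netloc
    external_urls = set()  # the set keeps each URL only once
    # href=True matches only <a> tags that actually have an href attribute.
    for anchor in html_page.find_all("a", href=True):
        url = urljoin(page_url, anchor["href"])
        parts = urlparse(url)
        if parts.scheme in ("http", "https") and parts.netloc != page_host:
            external_urls.add(url)
    return external_urls


def main(page_url):
    # Send the GET request; the timeout stops a slow server from hanging the script.
    response = requests.get(page_url, timeout=10)
    response.raise_for_status()  # stop on 4xx/5xx responses
    urls = external_links(response.text, page_url)
    for url in sorted(urls):
        print(url)
    print("Number of external links:", len(urls))
```

To try it against a live page, call `main("https://example.com/")` with the address you want to inspect. Keeping the parsing in `external_links` separate from the network request means you can check it offline by passing in an HTML string.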

EXTRACT LINKS FROM A WEB PAGE INSTALL

This article's first and most important part is installing the required modules and packages from your terminal.

The bs4 (Beautiful Soup) module of Python allows you to pull data out of HTML and XML files. You can install it with the following command: `pip install beautifulsoup4`

The requests module of Python allows you to make HTTP requests. You can install it with: `pip install requests`

We can extract all the external links or URLs from a web page using web scraping, one of Python's most powerful techniques. So, let us explore the process of extracting the external links and URLs from a web page.

As we are interested in extracting the external URLs of the web page, we will define an empty Python set named external_urls; because a set stores each value only once, duplicate links are dropped automatically. If you want to remove duplicate URLs from an existing list, you can also use our Remove Duplicate tool.
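A plain set, like external_urls, removes exact duplicates but does not keep the order in which links appeared. If order matters, a small local alternative is sketched here. It assumes that URLs differing only in their `#fragment` point to the same page; `dedupe_urls` is a hypothetical helper, not the Remove Duplicate tool.

```python
from urllib.parse import urldefrag


def dedupe_urls(urls):
    """Drop duplicate URLs, keeping the first occurrence of each.

    Assumption: "page#top" and "page" are the same page, so the fragment
    is stripped before comparing (and in the returned URLs).
    """
    seen = set()
    unique = []
    for url in urls:
        key = urldefrag(url).url  # URL without its #fragment
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique
```

The set handles the fast membership check, while the list preserves the original order of first appearance.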

EXTRACT LINKS FROM A WEB PAGE HOW TO

In this tutorial, we will see how to extract all the external links or URLs from a webpage using Python.
